Ari Cohn knows that social media platforms can seem like a daunting new frontier of technology, but he also believes that if we pay attention, we’ll see a pattern that is far from novel.

“My parents grew up when TV was just coming into the household,” he recalled. “But by the time I was born … they had learned how to incorporate it into their lives. It wasn't as big of a deal as the old people at the time thought it was, and we're going through that same exact thing with social media.”

Cohn, an attorney specializing in free speech, serves as free speech counsel at TechFreedom, a think tank dedicated to protecting civil liberties online. Increasingly, the arena where free expression is exercised, or restricted, is virtual.

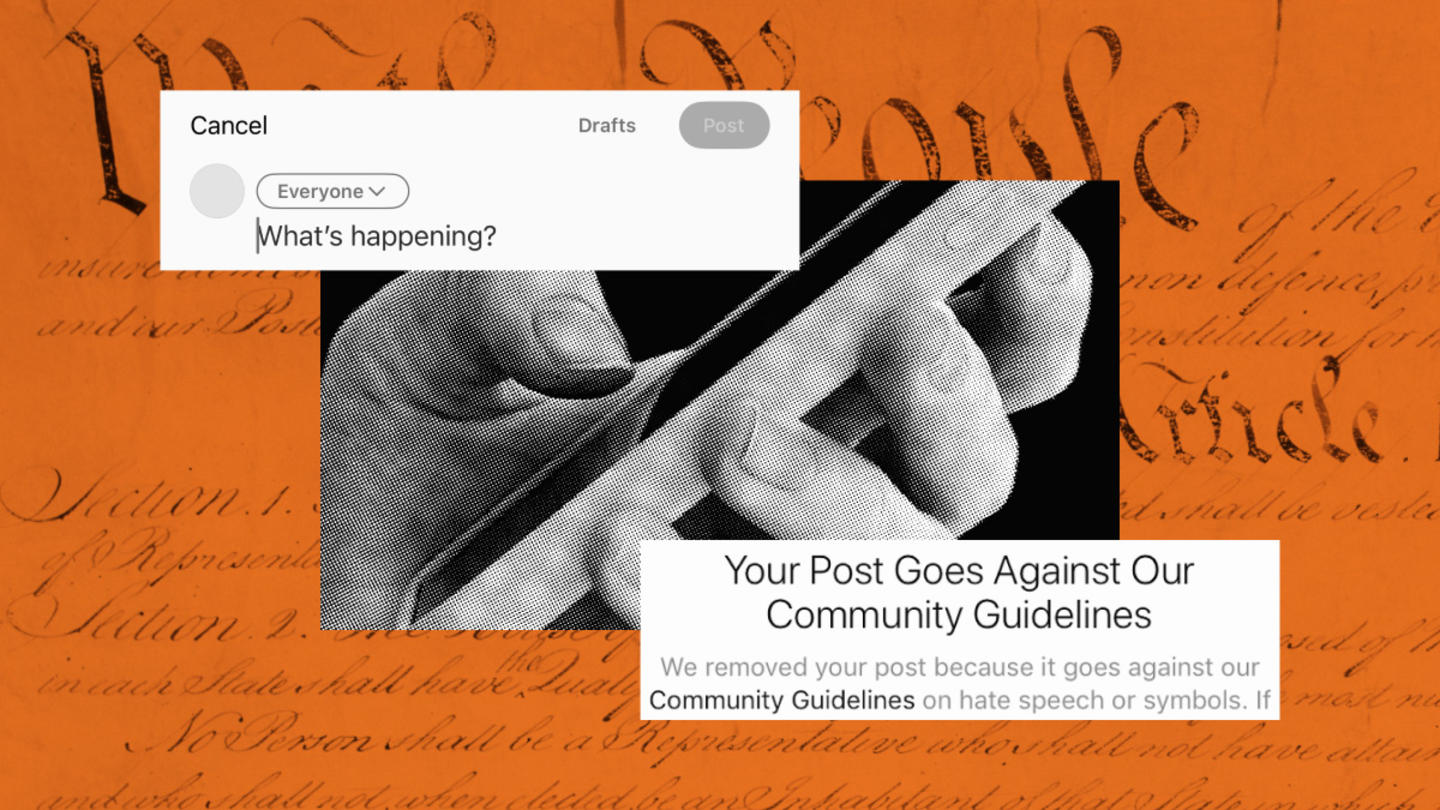

Perhaps no social platform has ignited more discussion about the implications of censorship than X. Since purchasing Twitter and rebranding it as X, Elon Musk has embraced a philosophy of “free speech absolutism,” making controversial moves such as reinstating users previously banned from the platform. Musk’s actions have sparked lively debates over whether users or administrators should be responsible for moderating speech online. But there’s more to the issue than meets the eye, Cohn said.

For one, online speech covers a lot. It includes everything from dance memes and news stories to family photos and influencer videos. But some practices and content also raise real concerns, and platforms face hard questions about how to address problems ranging from bullying to misinformation.

The sheer volume of content that appears on the internet each day (by some estimates, users publish 500 million new posts daily on X alone) makes content moderation extremely complicated. The complexity increases because different platforms serve diverse communities, each with its own reasons for going online. The critical question is how to effectively address harmful ideas without stifling beneficial ones.

Online content moderation is far from a simple debate, and there’s no such thing as a one-size-fits-all solution. But Cohn has a proposal that he believes lets users reap the benefits of democratized information on their own terms: showing people how to become their own content moderators, on an individual basis.

We sat down with Cohn to discuss the nuances and complex considerations surrounding online free speech, including its effects on society at large, and how individuals can be more shrewd users in everyday life.

Top-down moderation: the risks and roadblocks

The question of how to moderate speech isn’t unique to X, or even unique to the internet, for that matter.

“This isn't exclusive to Elon Musk,” Cohn said. “It's not just on social media platforms. We should care about free expression everywhere. It is incumbent upon us to consider opposing views and really think critically about what we believe.”

Other sites, such as Substack, Reddit, and Instagram, each have their own philosophies guiding how they approach community and user expression. But focusing on any one platform misses the point. The real question is where decisions about content are made. Are they made by a small, elite group of developers? Or are they in the hands of the users themselves? Centralized moderation inherently means that decisions about content are made far away from the people who are actually participating in a group, thread, or forum.

The results can be chaotic, even if they’re well-intentioned. Blanket policies limiting content can fail to recognize just how nuanced and individualized sweeping categories like “offensive” or “graphic” can be.

For instance, Instagram and Facebook continue to censor posts from breast cancer survivors intended to educate and raise awareness, mistaking them for sexual content.

Cohn points out that the sheer amount of content poured onto the internet every day, if not every minute, makes broad-scale, top-down moderation by the platforms themselves largely impossible. Instead, he advocates for each individual user to become their own personal moderation team.

“The more individual control you have, the more likely it is that a greater number of people will [be comfortable engaging online] because they'll be able to dial it in themselves,” Cohn explained.

Empowering users to design their own feeds

Transferring online content moderation into the hands of individuals can seem like a daunting shift — but if done mindfully, it is one that can empower users with the autonomy to become savvy, knowledgeable content consumers.

This requires an array of tools and options that let users shape their online experiences. Many of these are still in development, but Cohn is optimistic. He points to Bluesky, a promising social media platform that allows users to create their own content moderation algorithms. Other users can then choose among these to decide how they want their content to be moderated. Similarly, X’s Community Notes let users add context to misleading posts, and Substack allows writers to moderate and remove responses to their own posts as they see fit.

Of course, there are potential disadvantages to leaving content moderation to the users themselves. It’s human nature to shy away from considering or engaging with viewpoints that differ from or directly conflict with our own.

Society stands to gain when people are open to ideas different from their own, Cohn said. This doesn’t mean abandoning one’s principles or even changing one’s mind on a topic. It just means developing a broader, more holistic understanding of all the angles an issue can encompass.

“We have to understand what we believe,” Cohn said. “We can really only do that by winnowing out what we don’t believe.” That means critical thinking — i.e., “constructing arguments against our own views and [listening to] the views other people hold.”

That isn’t to say that all views deserve equal consideration, particularly ones that are unequivocally racist, sexist, or misinformed. Cohn cites child pornography and abuse, as well as footage of beheadings, as examples of content that has no place on the internet, no matter a user’s preference.

But within the realm of legitimate opposition, “we are better off encountering and engaging with and wrestling with people and ideas we disagree with greatly,” Cohn said. “We should all try to do it, even if it makes us a little bit uncomfortable. That's how we learn, and that is how we solidify our own beliefs.”

At the end of the day, giving users the autonomy to determine their own online experience may mean that many will decide not to engage with new people or different ideas.

However, others will engage, and the fact that they can do so consciously and intentionally, rather than having their experience dictated by top-down, centralized policies, makes all the difference.

“[Individualized moderation] means that maybe they won't come across some accounts that they disagree with, but that's okay,” Cohn said. “Everyone's got their different comfort levels. But I think that's a much more elegant approach than a company saying, ‘Here are our content policies. We’re going to enforce them at our discretion.’”

Being your own moderator can be daunting. Here’s how to start.

The first step to becoming a self-sufficient, savvy consumer is to understand that “content” itself, particularly the kind of content at issue in debates over free speech moderation, encompasses many different categories. Although it can be easy to subconsciously lump all threatening or offensive content together, there are very real legal differences in how these categories are treated, and users should take the time to recognize these nuances.

Cohn explained that although the First Amendment distinguishes between protected and unprotected speech, those same categories don’t quite apply on social media platforms. A user may be used to trusting the newspaper or magazine articles they read, but many social platforms have the right to host everything from hate speech to misinformation to plain bullying, all of which users should be on the lookout for.

It’s also helpful to realize that the current array of social media platforms, from X to Facebook to Substack to Reddit and beyond, forms a competitive marketplace of sorts. Comparing the content policies and moderation practices of different platforms can help users become more shrewd and holistic in their views.

Cohn advised users to engage in active, purposeful reflection when encountering content they’re uncomfortable with.

“I think that we should really get down deep and say, ‘Is this something that just makes me uncomfortable, or is this something that just by virtue of being seen poses some kind of danger?’” he said. “The line for that is different for every person, and you'll never get everyone to agree on where that line is, but I think it's useful for us all to individually think about that … It can help us really think about whether we're avoiding ideas we don't like or trying to avoid actual negative externalities.”

The future of free speech is in users’ hands

Looking forward, there’s reason to be both cautious and hopeful. Cohn is particularly interested in the promise of Bluesky’s approach and the potential for a standardized system of self-moderation to find its way into larger platforms’ menus as well. He envisions other platforms adopting solutions developed on Bluesky, creating a cycle in which platforms build on each other’s innovations in a shared effort toward individual moderation across the internet.

Ultimately, the future is in our hands — both as users online and as voters at the polls.

“I try not to worry about content moderation tools as much as I do the implementation and outcomes,” Cohn said. “A tool is one thing, but the policy behind it and the attitudes of the people who are implementing it have far more impact on what actually happens. I think the more important thing is to see what the people who are in charge of implementing and supporting them are doing — and what the policies underlying the content moderation tools are.”

No matter where policies land, however, Cohn believes younger generations can take up the call to moderate their own online experiences, even as the internet grows in ways none of us can predict in the decades to come.

“The kids are going to be all right,” he said. “They're going to figure out how to integrate this stuff into their normal lives. It's going to be an adjustment and yes, there might be growing pains. But this is not something we haven't dealt with before.”

***

TechFreedom is supported by Stand Together Trust, which provides funding and strategic capabilities to innovators, scholars, and social entrepreneurs to develop new and better ways to tackle America’s biggest problems.
